Assess performance of a 'glmnet' object using test data.

Given a test set, produce summary performance measures for the glmnet model(s).

assess.glmnet(object, newx = NULL, newy, weights = NULL, family = c("gaussian", "binomial", "poisson", "multinomial", "cox", "mgaussian"), ...)

confusion.glmnet(object, newx = NULL, newy, family = c("binomial", "multinomial"), ...)

roc.glmnet(object, newx = NULL, newy, ...)

Arguments

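| Argument | Description |
|---|---|
| object | Fitted model object or CV object (for example, from glmnet or cv.glmnet), or, in place of a model, a vector or matrix of predictions (see Details). |
| newx | If predictions are to be made, these are the 'x' values. Required for |
| newy | Required argument for all functions; the new response values. |
| weights | Observation weights for the test observations. |
| family | The family of the model, needed in case predictions are passed in as 'object'. |
| ... | Additional arguments used when predictions are to be made, for example 'offsets', 's', or values of 'gamma' for 'relaxed' fits. |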

Value

assess.glmnet produces a list of vectors of measures, roc.glmnet a list of 'roc' two-column matrices, and confusion.glmnet a list of tables. If a single prediction is provided, or predictions are made from a CV object, the latter two drop the list structure and return a single matrix or table.
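For the gaussian family, the example output shows the shape of the assess.glmnet result: a list with one named vector per measure, one entry per column of predictions, each tagged with a "measure" attribute. The base-R sketch below reproduces that shape under the assumption that the measures are (optionally weighted) mean squared and mean absolute errors. `gaussian_measures_sketch` is a hypothetical helper for illustration, not glmnet's internal code.

```r
# Hypothetical helper (not part of glmnet): weighted MSE and MAE for each
# column of a prediction matrix, returned as a list of named vectors that
# carry a "measure" attribute, mimicking the printed layout of assess.glmnet.
gaussian_measures_sketch <- function(pred, y, weights = rep(1, length(y))) {
  pred <- as.matrix(pred)                # a single vector becomes one column
  stopifnot(nrow(pred) == length(y), length(weights) == length(y))
  resid <- y - pred                      # y is recycled down each column
  mse <- apply(resid^2, 2, weighted.mean, w = weights)
  mae <- apply(abs(resid), 2, weighted.mean, w = weights)
  attr(mse, "measure") <- "Mean-Squared Error"
  attr(mae, "measure") <- "Mean Absolute Error"
  list(mse = mse, mae = mae)
}

# Usage: two columns of predictions, as if from two values of lambda
pred <- cbind(a = c(1, 2, 3), b = c(1, 1, 1))
y <- c(1, 2, 4)
# mse is 1/3 for column a and 10/3 for column b
gaussian_measures_sketch(pred, y)
```

Each column is scored independently, which is why a model fit along a lambda path yields one value per s0, s1, ... in the example output.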

Details

assess.glmnet produces all the different performance measures

provided by cv.glmnet for each of the families. A single vector, or a

matrix of predictions can be provided, or fitted model objects or CV

objects. In the case when the predictions are still to be made, the

... arguments allow, for example, 'offsets' and other prediction

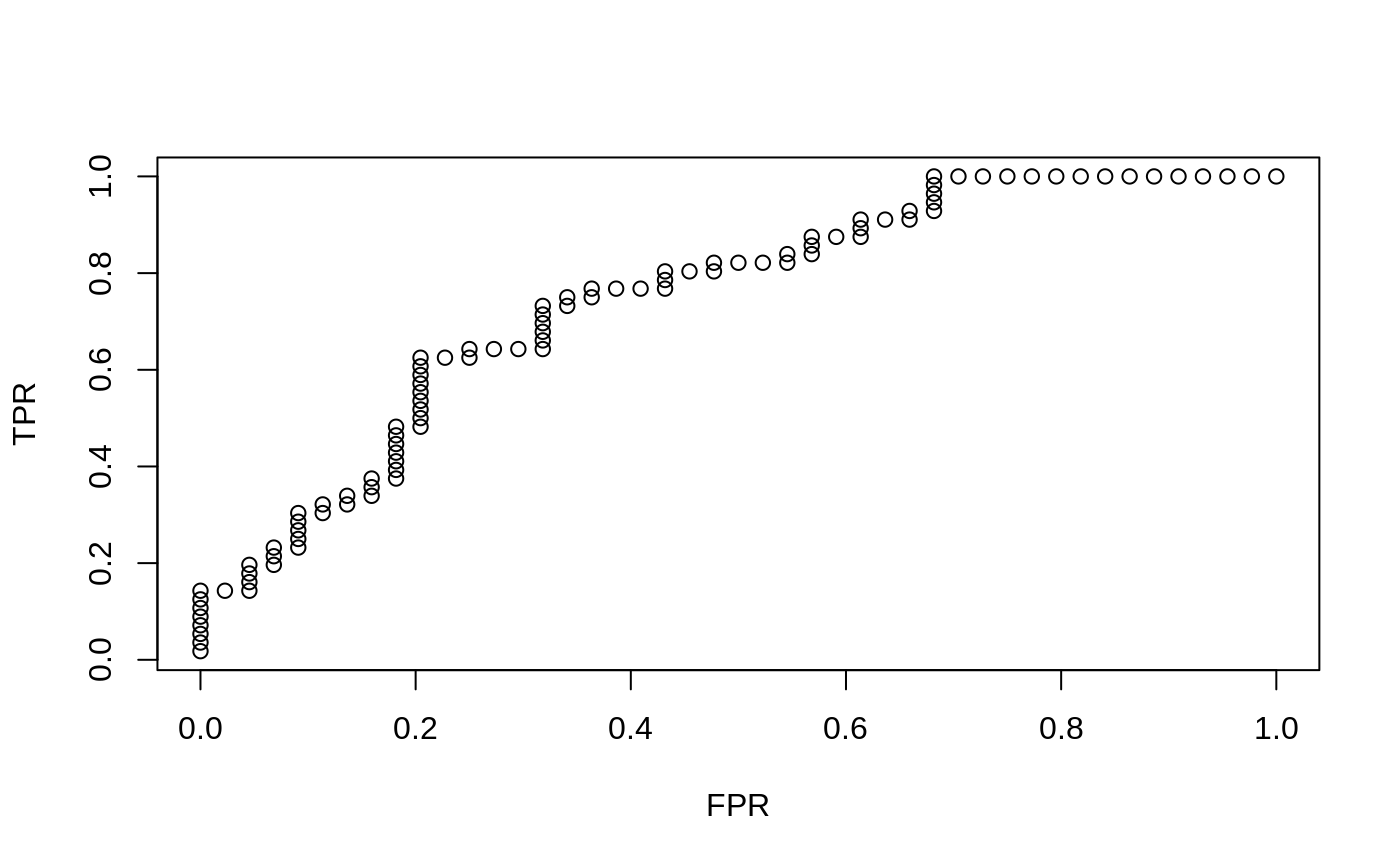

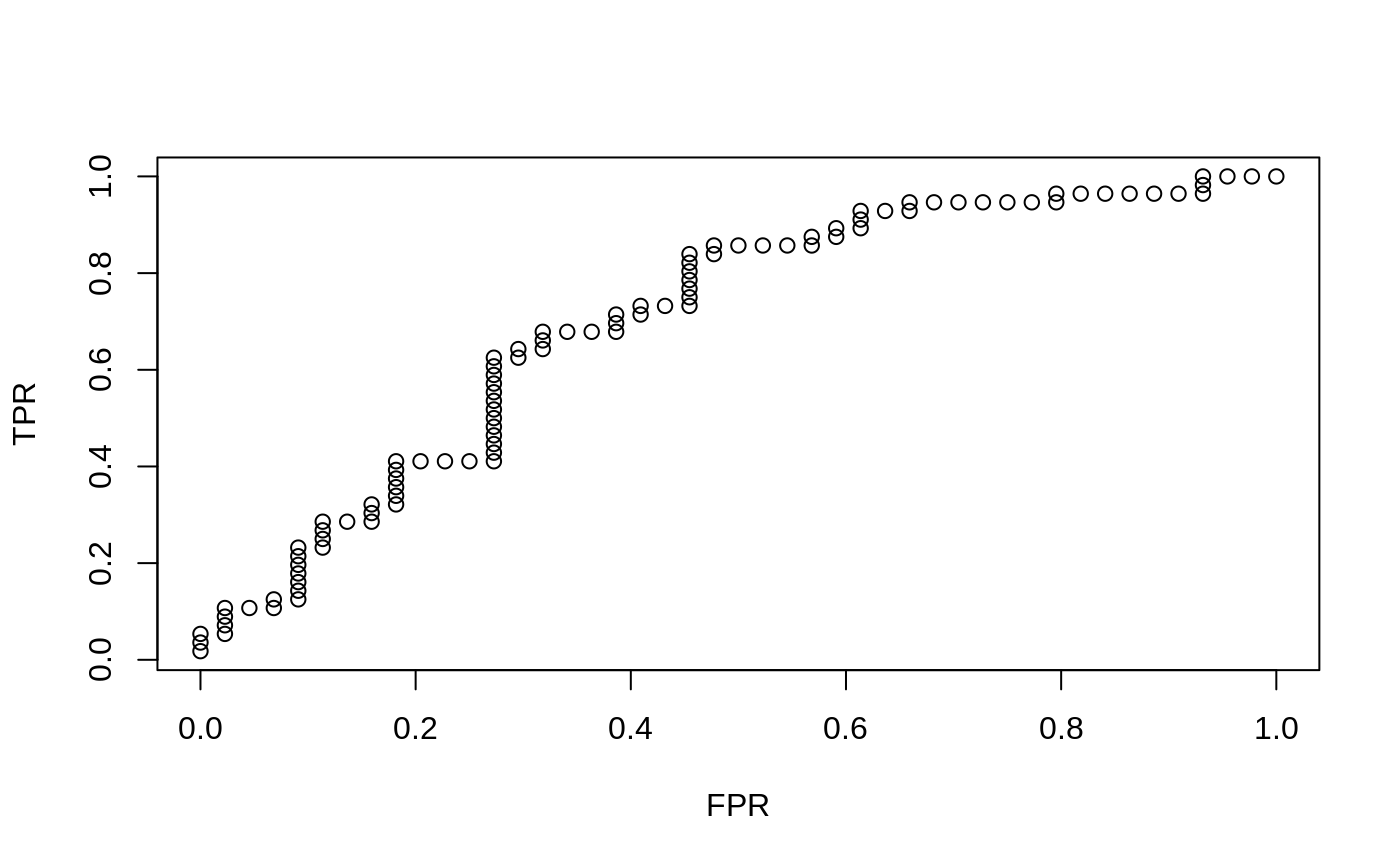

parameters such as values for 'gamma' for 'relaxed' fits. roc.glmnet

produces for a single vector a two column matrix with columns TPR and FPR

(true positive rate and false positive rate). This object can be plotted to

produce an ROC curve. If more than one predictions are called for, then a

list of such matrices is produced. confusion.glmnet produces a

confusion matrix tabulating the classification results. Again, a single

table or a list, with a print method.
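The TPR/FPR matrix that roc.glmnet returns can be sketched in base R, assuming a binary response with two classes and a numeric score where larger values mean "more likely the second class" (the class glmnet's binomial family models). `roc_sketch` is a hypothetical helper: it sweeps every distinct score as a threshold, and glmnet's own implementation may order rows, handle ties, or name columns differently.

```r
# Hypothetical helper (not part of glmnet): FPR/TPR pairs for a binary
# response, using every distinct score as a classification threshold.
roc_sketch <- function(score, y) {
  # Recode the two classes as 0/1: first factor level -> 0, second -> 1
  y <- as.integer(factor(y)) - 1L
  stopifnot(length(score) == length(y), all(y %in% 0:1))
  n_pos <- sum(y == 1L)
  n_neg <- sum(y == 0L)
  stopifnot(n_pos > 0, n_neg > 0)
  # Thresholds from the largest score down, so both rates grow row by row
  thresholds <- sort(unique(score), decreasing = TRUE)
  tpr <- vapply(thresholds, function(t) sum(score >= t & y == 1L) / n_pos, numeric(1))
  fpr <- vapply(thresholds, function(t) sum(score >= t & y == 0L) / n_neg, numeric(1))
  # Prepend (0, 0) so the curve starts at the origin; FPR comes first so that
  # plot() puts it on the x-axis, as in a conventional ROC curve
  cbind(FPR = c(0, fpr), TPR = c(0, tpr))
}

# Usage: perfectly separated scores give a curve through the top-left corner
m <- roc_sketch(score = c(0.9, 0.8, 0.3, 0.1), y = c(1, 1, 0, 0))
m
```

Calling plot(m, type = "l") on the result draws the curve, in the same spirit as plotting the output of roc.glmnet in the examples.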

See also

cv.glmnet and glmnet.measures

Examples

library(glmnet)
x = matrix(rnorm(100 * 20), 100, 20)
y = rnorm(100)
g2 = sample(1:2, 100, replace = TRUE)
g4 = sample(1:4, 100, replace = TRUE)
fit1 = glmnet(x, y)
assess.glmnet(fit1, newx = x, newy = y)
#> $mse
#> s0 s1 s2 s3 s4 s5 s6 s7
#> 1.0256625 1.0186182 1.0127699 1.0079146 1.0009012 0.9944050 0.9881917 0.9785125
#> s8 s9 s10 s11 s12 s13 s14 s15
#> 0.9694334 0.9600505 0.9518679 0.9450459 0.9393820 0.9344261 0.9298835 0.9258736
#> s16 s17 s18 s19 s20 s21 s22 s23
#> 0.9218873 0.9177489 0.9143139 0.9114621 0.9090945 0.9069818 0.9051466 0.9035900
#> s24 s25 s26 s27 s28 s29 s30 s31
#> 0.9022983 0.9012258 0.9003355 0.8994700 0.8987397 0.8981355 0.8976339 0.8971869
#> s32 s33 s34 s35 s36 s37 s38 s39
#> 0.8968117 0.8965000 0.8962412 0.8960189 0.8957828 0.8955672 0.8953885 0.8952384
#> s40 s41 s42 s43 s44 s45 s46 s47
#> 0.8951138 0.8950103 0.8949244 0.8948531 0.8947939 0.8947447 0.8947035 0.8946660
#> s48 s49 s50 s51 s52 s53 s54 s55
#> 0.8946347 0.8946087 0.8945885 0.8945706 0.8945555 0.8945430 0.8945325 0.8945239
#> s56 s57 s58 s59 s60 s61 s62 s63
#> 0.8945167 0.8945107 0.8945058 0.8945017 0.8944983 0.8944954 0.8944931 0.8944911
#> s64 s65 s66
#> 0.8944895 0.8944882 0.8944870
#> attr(,"measure")
#> [1] "Mean-Squared Error"
#>
#> $mae
#> s0 s1 s2 s3 s4 s5 s6 s7
#> 0.7764511 0.7724494 0.7689227 0.7659417 0.7630279 0.7607338 0.7587392 0.7554860
#> s8 s9 s10 s11 s12 s13 s14 s15
#> 0.7522597 0.7506578 0.7496560 0.7488416 0.7484916 0.7483696 0.7480547 0.7476008
#> s16 s17 s18 s19 s20 s21 s22 s23
#> 0.7468598 0.7457893 0.7448148 0.7439268 0.7431178 0.7423436 0.7416206 0.7412384
#> s24 s25 s26 s27 s28 s29 s30 s31
#> 0.7408911 0.7405746 0.7402862 0.7398617 0.7394559 0.7390905 0.7387575 0.7384802
#> s32 s33 s34 s35 s36 s37 s38 s39
#> 0.7382580 0.7380551 0.7378702 0.7377018 0.7375338 0.7373768 0.7371878 0.7370234
#> s40 s41 s42 s43 s44 s45 s46 s47
#> 0.7368913 0.7368198 0.7367547 0.7366953 0.7366413 0.7365920 0.7365434 0.7364781
#> s48 s49 s50 s51 s52 s53 s54 s55
#> 0.7364195 0.7363662 0.7363252 0.7362965 0.7362801 0.7362652 0.7362515 0.7362391
#> s56 s57 s58 s59 s60 s61 s62 s63
#> 0.7362278 0.7362175 0.7362081 0.7361995 0.7361917 0.7361846 0.7361781 0.7361722
#> s64 s65 s66
#> 0.7361668 0.7361619 0.7361574
#> attr(,"measure")
#> [1] "Mean Absolute Error"
#>
preds = predict(fit1, newx = x[1:20, ], s = c(0.01, 0.005))
assess.glmnet(preds, newy = y[1:20], family = "gaussian")
#> $mse
#> 1 2
#> 0.9443280 0.9348565
#> attr(,"measure")
#> [1] "Mean-Squared Error"
#>
#> $mae
#> 1 2
#> 0.7573680 0.7546214
#> attr(,"measure")
#> [1] "Mean Absolute Error"
#>
fit2c = cv.glmnet(x, g2, family = "binomial")
plot(roc.glmnet(fit2c, newx = x, newy = g2, s = "lambda.min"))
fit3 = glmnet(x, g4, family = "multinomial")
confusion.glmnet(fit3, newx = x[1:50, ], newy = g4[1:50], s = 0.01)
#> True
#> Predicted 1 2 3 4 Total
#> 1 18 2 2 3 25
#> 2 0 9 0 0 9
#> 3 1 0 3 3 7
#> 4 1 1 1 6 9
#> Total 20 12 6 12 50
#>
#> Percent Correct: 0.72
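The confusion.glmnet output in the last example is a cross-tabulation of predicted against true classes with "Total" margins, followed by the proportion of observations on the diagonal (there, 36 of 50, i.e. 0.72). The same layout can be sketched in base R with table() and addmargins(). `confusion_sketch` is a hypothetical helper, and it assumes the predicted class labels have already been computed, for instance with predict(..., type = "class").

```r
# Hypothetical helper (not part of glmnet): cross-tabulate predicted vs. true
# class labels, add "Total" margins, and report the proportion correct.
confusion_sketch <- function(predicted, true) {
  stopifnot(length(predicted) == length(true))
  # Use one shared set of levels so the table is square and the diagonal
  # lines up predicted and true classes
  lev <- sort(unique(c(as.character(true), as.character(predicted))))
  tab <- table(Predicted = factor(predicted, levels = lev),
               True = factor(true, levels = lev))
  percent_correct <- sum(diag(tab)) / sum(tab)
  list(table = addmargins(tab, FUN = list(Total = sum), quiet = TRUE),
       percent_correct = percent_correct)
}

# Usage: 5 observations, 3 of them classified correctly
res <- confusion_sketch(predicted = c(1, 1, 2, 2, 2), true = c(1, 2, 2, 2, 1))
res$table            # 2 x 2 counts plus a "Total" row and column
res$percent_correct  # 0.6
```

As in the printed glmnet output, "Percent Correct" here is a proportion between 0 and 1 rather than a value out of 100.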